There was once a time when volumetric effects were hidden from everyone on a film set except the VFX supervisors huddled around grainy, low-resolution preview monitors. You might shoot an elaborate scene with enveloping fog swirling through ancient forests, crackling embers dancing in haunted corridors, and ethereal magic weaving around a sorcerer's staff. But no one on set saw a single wisp until post-production.

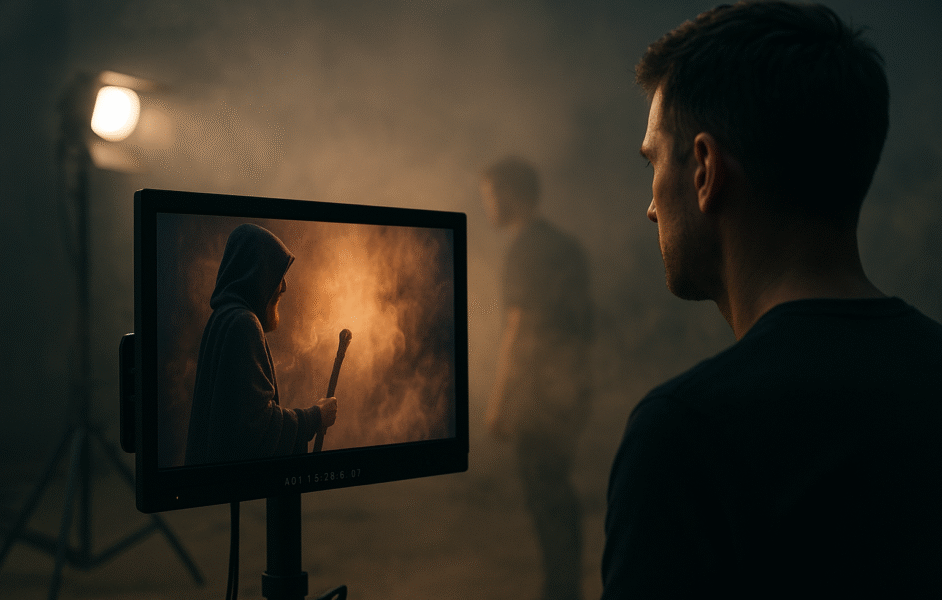

The production crew stared at static sets, and actors delivered performances against blank gray walls, tasked with imagining drifting dust motes or billowing smoke. All of that changed when real-time volumetrics moved from research labs into production studios, lifting the veil on atmospheres that breathe and respond to the camera's gaze as scenes unfold. Today's filmmakers can sculpt and refine atmospheric depth during the shoot itself, rewriting how cinematic worlds are built and how narratives take shape in front of, and within, the lens.

In those traditional workflows, directors relied on instinct and memory, conjuring visions of smoky haze or crackling fire in their minds as cameras rolled. Low-resolution proxies (lo-fi particle tests and simplified geometric volumes) stood in for the final effects, and only after long nights on render farms would the full volumetric textures appear.

Actors performed against darkened LED walls or green screens, squinting at faint glows or abstract silhouettes, their illusions tethered to technical diagrams instead of the tangible atmospheres they would inhabit on film. After production wrapped, render farms labored for hours or days to produce high-resolution volumetric renders of smoke swirling around moving objects, embers reacting to wind, or magical flares trailing a hero's gesture. These overnight processes introduced painful lags in feedback loops, locking down creative choices and leaving little room for spontaneity.

Studios like Disney pioneered the StageCraft LED volume for The Mandalorian, blending live LED walls with pre-rendered volumetric simulations to suggest immersive environments. Yet even ILMxLAB's state-of-the-art LED volume stages relied on approximations, leaving directors to second-guess creative decisions until final composites arrived.

When NVIDIA's real-time volumetric ray-marching demos stole the spotlight at GDC, they were more than a technical showcase. They revealed that volumetric lighting, smoke, and particles could live inside a game engine viewport rather than hidden behind render-farm walls. Unreal Engine's built-in volumetric cloud and fog systems further proved that these effects could run at cinematic fidelity without consuming overnight render budgets. Now, when an actor exhales and watches a wisp of mist curl around their face, the performance transforms. Directors gesture at the air, asking for denser fog or brighter embers, and see the result instantly. Cinematographers and VFX artists, once separated by departmental walls, now work side by side on a single, living canvas, sculpting light and particle behavior like playwrights improvising on opening night.
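For readers curious what "ray-marching" refers to, the core idea is that the renderer steps along each camera ray. At each step it samples fog density, adds the light scattered toward the camera, and dims everything behind that point according to the Beer-Lambert law. The Python below is a minimal, illustrative sketch of that idea. It is not NVIDIA's or Unreal Engine's implementation. The function name, the uniform ambient light (no shadow rays), and all parameter values are assumptions chosen for clarity.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def ray_march(
    origin: Vec3,
    direction: Vec3,
    density: Callable[[Vec3], float],
    max_distance: float = 10.0,
    num_steps: int = 64,
    sigma_t: float = 1.0,   # extinction coefficient (illustrative assumption)
    albedo: float = 0.8,    # scattering / extinction ratio (illustrative assumption)
    light: float = 1.0,     # uniform ambient light, no shadowing (assumption)
) -> Tuple[float, float]:
    """March one ray through a participating medium such as fog.

    Returns (in_scattered_light, transmittance), where transmittance is the
    fraction of background light that survives the trip through the fog.
    """
    step = max_distance / num_steps
    transmittance = 1.0
    radiance = 0.0
    for i in range(num_steps):
        # Sample the density field at the midpoint of the current segment.
        t = (i + 0.5) * step
        p = (origin[0] + direction[0] * t,
             origin[1] + direction[1] * t,
             origin[2] + direction[2] * t)
        rho = max(density(p), 0.0)
        # Beer-Lambert: fraction of light absorbed or scattered in this segment.
        step_opacity = 1.0 - math.exp(-sigma_t * rho * step)
        # Light scattered toward the camera, dimmed by the fog in front of it.
        radiance += transmittance * step_opacity * albedo * light
        transmittance *= 1.0 - step_opacity
        if transmittance < 1e-4:  # stop early once the fog is effectively opaque
            break
    return radiance, transmittance


def fog_sphere(p: Vec3) -> float:
    # Hypothetical fog bank: constant density 0.5 inside a sphere of radius 2 at z = 5.
    dx, dy, dz = p[0], p[1], p[2] - 5.0
    return 0.5 if dx * dx + dy * dy + dz * dz < 4.0 else 0.0


if __name__ == "__main__":
    glow, remaining = ray_march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), fog_sphere)
    print(f"in-scattered light: {glow:.3f}, transmittance: {remaining:.3f}")
```

Real-time engines make this loop affordable by running it per pixel on the GPU, often with fewer samples plus temporal reuse. Offline renderers use many more samples and full light transport, which is part of why they needed render farms.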

Yet most studios still cling to offline-first infrastructures designed for a world of patient, frame-by-frame renders. Billions of data points from uncompressed volumetric captures pour onto storage arrays, inflating budgets and burning compute cycles. Hardware bottlenecks stall creative iteration as teams wait hours (or even days) for simulations to converge. Meanwhile, cloud invoices balloon as terabytes shuffle back and forth, costs that are often examined too late in a production's lifecycle.

In many respects, this marks the denouement for siloed hierarchies. Real-time engines have shown that the line between performance and post is no longer a wall but a gradient. You can see this innovation in real-time rendering and simulation at work in the Real-Time Live presentations at SIGGRAPH 2024, which show how real-time engines are making post-production more interactive and immediate. Teams used to handing off a locked sequence to the next department now collaborate on the same shared canvas, much like a stage play where fog rolls in sync with a character's gasp and a visual effect pulses with the actor's heartbeat, all choreographed on the spot.

Volumetrics are more than atmospheric decoration; they constitute a new cinematic language. A subtle haze can mirror a character's doubt, thickening in moments of crisis, while glowing motes might scatter like fading memories, pulsing in time with a haunting score. Microsoft's experiments in live volumetric capture for VR narratives show how environments can branch and respond to user actions. They suggest that cinema, too, may shed its fixed nature and become a responsive experience in which the world itself participates in storytelling.

Behind every stalled volumetric shot lies a cultural inertia as formidable as any technical limitation. Teams trained on batch-rendered pipelines are often wary of change, holding onto familiar schedules and milestone-driven approvals. Yet every day spent in locked-down workflows is a day of lost creative opportunity. The next generation of storytellers expects real-time feedback loops, seamless viewport fidelity, and playgrounds for experimentation, tools they already use in gaming and interactive media.

Studios unwilling to modernize risk more than inefficiency; they risk losing talent. We already see the impact: young artists steeped in Unity, Unreal Engine, and AI-augmented workflows view render farms and tangled node-based software as relics. As Disney+ blockbusters continue to showcase LED volume stages, studios that refuse to evolve will find their offer letters left unopened. The conversation shifts from "Can we do this?" to "Why aren't we doing this?", and the studios that answer best will shape the next decade of visual storytelling.

Amid this landscape of creative longing and technical bottlenecks, a wave of emerging real-time volumetric platforms began to reshape expectations. They introduced GPU-accelerated playback of volumetric caches, on-the-fly compression algorithms that reduced data footprints by orders of magnitude, and plugins that integrated seamlessly with existing digital content creation tools. They embraced AI-driven simulation guides that predicted fluid and particle behavior, sparing artists manual keyframing labor. Crucially, they offered intuitive interfaces that treated volumetrics as an organic part of the art direction process rather than a specialized post-production task.

Studios can now sculpt atmospheric effects in concert with their narrative beats, adjusting parameters in real time without leaving the editing suite. In parallel, networked collaboration spaces emerged, enabling distributed teams to co-author volumetric scenes as though they were pages in a shared script. These innovations signal a departure from legacy constraints, blurring the line between pre-production, principal photography, and post-production sprints.

While these platforms addressed immediate pain points, they also pointed toward a broader vision of content creation in which volumetrics live natively inside real-time engines at cinematic fidelity. The most forward-thinking studios recognized that deploying real-time volumetrics required more than software upgrades: it demanded cultural shifts. They understood that real-time volumetrics represent more than a technical breakthrough; they redefine cinematic storytelling.

When on-set atmospheres become dynamic partners in performance, narratives gain a depth and nuance that were once impossible. Creative teams unlock new possibilities for improvisation, collaboration, and emotional resonance, guided by the living language of volumetric elements that respond to intent and discovery. Yet realizing this potential will require studios to confront the hidden costs of their offline-first past: data burdens, workflow silos, and the risk of losing the next generation of artists.

The path forward lies in weaving real-time volumetrics into the fabric of production practice, aligning tools, talent, and culture toward a unified vision. It is an invitation to rethink our craft, to dissolve the boundaries between concept and image, and to embrace an era in which every frame pulses with possibilities that emerge in the moment, authored by both human creativity and real-time technology.
